Introduction

Singapore is becoming one of the first countries to mandate age verification at the app store level. It is also considering similar identity checks for social media websites. This could inspire other governments, especially in Asia and Europe, to adopt similar frameworks.

So what is the purpose of checking someone’s age? What are the drawbacks of such surveillance?

New Age Verification Measures

Singapore will soon implement age assurance requirements for app stores, and it is exploring similar measures for social media platforms. These measures aim to ensure that the content users see is appropriate for their age.

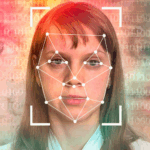

The new legislation requires app stores (such as those run by Apple, Google, Huawei, Microsoft, and Samsung) to implement age verification methods by March 31, 2026. In practice, this may mean checking credit cards or SingPass numbers to confirm that users are over 18. Accepted methods fall into two groups: age estimation (using AI or facial analysis) and age verification (using official IDs or digital credentials).

New Online Safety Legislation

A new Online Safety (Relief and Accountability) Bill is set for discussion in Singapore’s Parliament soon. This legislation, OSRA, aims to:

- Require platforms to remove harmful content quickly.

- Hold perpetrators and platforms accountable for online harms.

- Establish an Online Safety Commission to support victims and oversee compliance.

While the law intends to protect underage users, it also aims to curb the rise in harmful content on social media, from cyberbullying to inappropriate content to hateful speech directed at others.

Social media platforms like Facebook and Instagram were identified as major sources of such content, and the problem is not confined to one part of the world. Wherever they live, many users do not report potentially harmful posts, either out of apathy or because they lack confidence in the reporting process.

Other countries are developing their own legislation to handle online privacy and protection, including the Kids Online Safety Act (KOSA) in the United States and the UK’s Online Safety Act (2023).

Protecting Yourself and Your Children Online

Authorities emphasize the need for greater public education to raise awareness of online harms. In the meantime, parents should get more involved in monitoring and guiding their children’s digital behavior. They can also research and work with platforms that publicly aim to improve content moderation and limit children’s exposure to age-inappropriate material.

On the flip side, age verification methods, especially those using facial recognition or ID documents, raise questions about data privacy and surveillance.

As more people prioritize data privacy and a positive online experience, tech giants like Apple and Meta may face mounting pressure to standardize age assurance for all users, rather than tailoring it to each country’s legislation. People will likely push for a balance between surveillance and safety, too.

Conclusion

As people and their governments grapple with the balance between user privacy and online protection worldwide, Singapore’s approach could serve as a blueprint for others seeking to safeguard young users in an increasingly complex digital landscape.

While these measures promise stronger protections against harmful content, they also raise critical questions about surveillance, data privacy, and the role of tech companies in shaping safe online environments. The challenge ahead lies in creating systems that are both effective and respectful of individual rights.

As the digital world evolves, so too must our frameworks for accountability, transparency, and care. Safety shouldn’t come at the cost of freedom, and privacy can’t be sacrificed for protection.

The post Singapore Mandates Age Verification and Online Accountability appeared first on Cybersafe.